10 Validity Argument

The Dynamic Learning Maps® (DLM®) Alternate Assessment System is based on the core belief that all students should have access to challenging, grade-level academic content. Therefore, DLM assessments provide students with the most significant cognitive disabilities the opportunity to demonstrate what they know and can do.

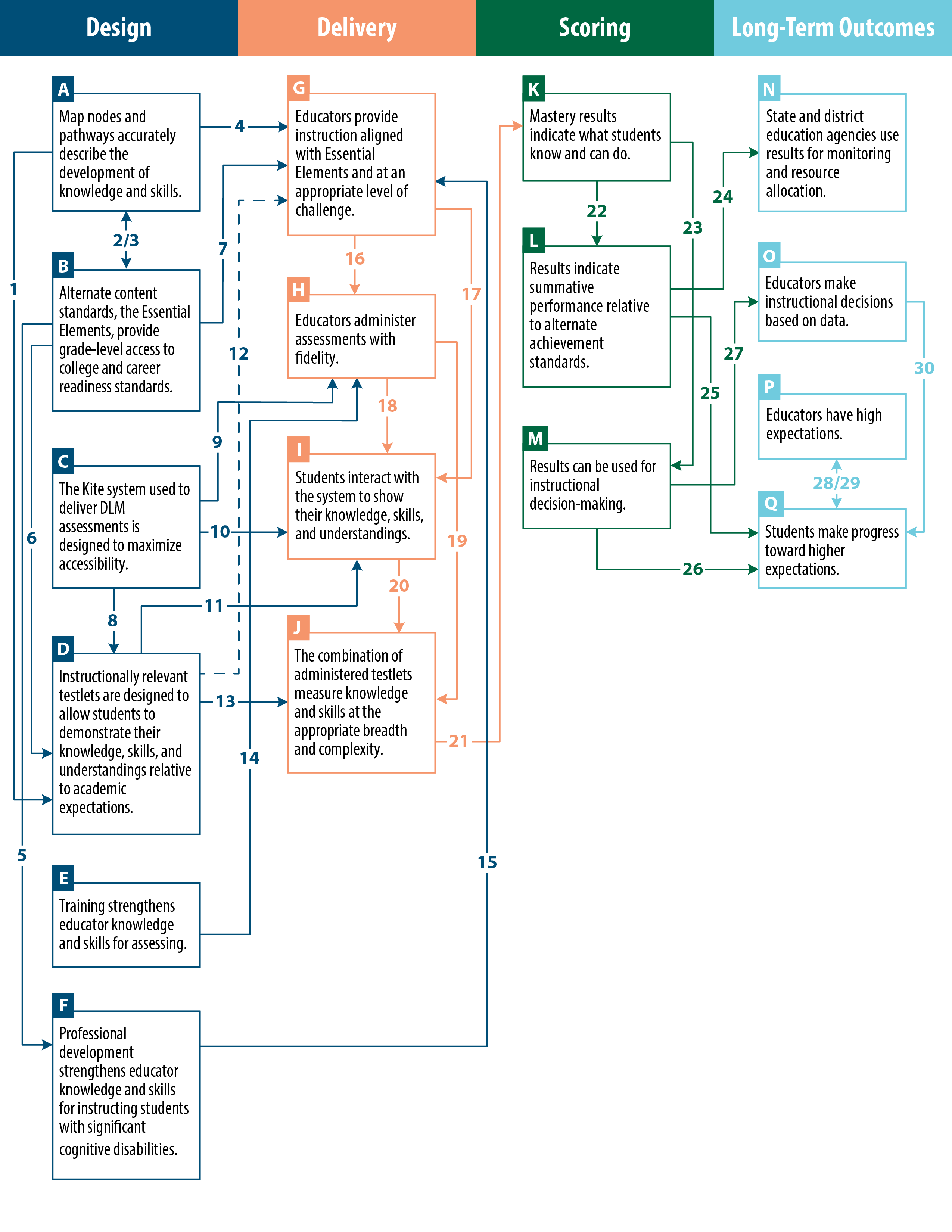

The DLM program adopted an argument-based approach to assessment validation. We adopted a three-tiered approach to validation, which included specification of 1) a Theory of Action (see Figure 10.1) with defining statements in the validity argument that must be in place to achieve the goals of the system and the chain of reasoning between those statements; 2) an interpretive argument defining propositions that must be examined to evaluate each component of the Theory of Action; and 3) validity studies to evaluate each proposition in the interpretive argument. For a complete description of the development process, see the 2021–2022 Technical Manual—Year-End Model (Dynamic Learning Maps Consortium, 2022).

Figure 10.1: DLM Theory of Action

As part of the DLM program’s commitment to continuous improvement, each year, additional evidence is collected to evaluate the extent to which results support intended uses. The amount and type of evidence necessary to support a claim depends on factors such as the type or importance of the claim and prior evidence (Chapelle, 2021) as well as feasibility of collecting the evidence (American Educational Research Association et al., 2014). A strong logical argument for a claim can reduce the need for empirical evidence (Kane, 2013). As such, the DLM program considers the body of prior evidence, strength of the existing argument, and feasibility of collecting additional evidence when prioritizing validity studies for each operational administration year.

The 2021–2022 Technical Manual describes the DLM Theory of Action and comprehensive validity evidence collected from the inception of the DLM program in 2010 through the 2021–2022 administration year. This chapter describes additional evidence collected in 2022–2023. The chapter concludes with a synthesis of validity evidence collected to date for each statement in the Theory of Action to evaluate the extent to which results reflect their intended meaning and can be used for their intended purposes. Given the limited changes to the DLM operational system in 2022–2023, most evidence described in this update is empirical rather than procedural. Complete descriptions of procedural evidence can be found in the 2021–2022 Technical Manual—Year-End Model (Dynamic Learning Maps Consortium, 2022).

10.1 Validity Evidence Collected in 2022–2023

The DLM program collected evidence across the four sections of the Theory of Action: design, delivery, scoring, and long-term outcomes.

10.1.1 Design

Table 10.1 shows evidence collected in 2022–2023 to evaluate the design statements and propositions in the interpretive argument. The sections following the table describe the evidence related to each statement. Given the breadth of existing evidence available, along with the lack of changes to map and Essential Element (EE) design, the DLM program did not prioritize collecting additional evidence of map structure or EEs in the 2022–2023 administration.

| Statement | Evidence collected in 2022–2023 | Chapter(s) |
|---|---|---|
| A. Map nodes and pathways accurately describe the development of knowledge and skills. | No additional evidence collected | – |
| B. Alternate content standards, the Essential Elements, provide grade-level access to college and career readiness standards. | No additional evidence collected | – |
| C. The Kite® system used to deliver DLM assessments is designed to maximize accessibility. | Accessibility support prevalence, test administrator survey | 4 |
| D. Instructionally relevant testlets are designed to allow students to demonstrate their knowledge, skills, and understandings relative to academic expectations. | Item analyses, external review ratings, test administrator survey | 3, 4 |
| E. Training strengthens educator knowledge and skills for assessing. | Description of new note-taking tool, test administrator survey responses, training data extracts | 4, 9 |
| F. Professional development strengthens educator knowledge and skills for instructing and assessing students with significant cognitive disabilities. | Professional development ratings and prevalence data | 9 |

10.1.1.1 A: Map Nodes and Pathways Accurately Describe the Development of Knowledge and Skills

Three propositions describe the accuracy of map nodes and pathways in the DLM assessment system: 1) nodes are specified at the appropriate level of granularity and are distinct from other nodes; 2) nodes for linkage levels are correctly prioritized and are adequately spaced within the breadth of the map; and 3) nodes are sequenced according to acquisition order, given population variability. While no additional evidence was collected in 2022–2023, the 2021–2022 Technical Manual describes procedural evidence from input statements in the Theory of Action, the map development process, and mini-maps. The 2021–2022 Technical Manual also describes empirical evidence from map external review ratings, modeling analyses, and alignment studies, which provide support for the statement and propositions. No changes were made to map content used for operational assessments in 2022–2023.

10.1.1.2 B: Alternate Content Standards, the Essential Elements, Provide Grade-Level Access to College and Career Readiness Standards

Four propositions describe the EEs measured by DLM assessments: 1) the grain size and description of EEs are sufficiently well-defined to communicate a clear understanding of the targeted knowledge, skills, and understandings; 2) EEs capture what students should know and be able to do at each grade level to be prepared for postsecondary opportunities, including college, career, and citizenship; 3) the collection of EEs in each grade sufficiently samples the domain; and 4) EEs have appropriately specified linkage levels measuring map nodes. While no additional evidence was collected in 2022–2023, the 2021–2022 Technical Manual describes procedural evidence from input statements in the Theory of Action, EE development and review, vertical articulation evidence, and helplet videos. The 2021–2022 Technical Manual also describes empirical evidence from external reviews, alignment studies, and postsecondary opportunity ratings that provide support for the statement and propositions. No changes were made to EEs in 2022–2023.

10.1.1.3 C: The Kite System Used to Deliver DLM Assessments is Designed to Maximize Accessibility

Four propositions describe the design and accessibility of the Kite® Suite used to deliver DLM assessments: 1) system design is consistent with accessibility guidelines; 2) supports needed by the student are available within and outside of the assessment system; 3) item types support the range of students in presentation and response; and 4) the Kite Suite is accessible and usable by educators. The 2021–2022 Technical Manual describes procedural evidence from system documentation, Accessibility and Test Administration manuals, helplet videos, and description of item types. The 2021–2022 Technical Manual also describes empirical evidence from data on prevalence of accessibility support use, test administrator surveys, focus groups, cognitive labs, and educator cadres. While there were no changes to the Kite Suite in 2022–2023, additional data on accessibility support use and from the test administrator survey were collected. Results were similar to findings reported in the 2021–2022 Technical Manual: system data indicate that all available accessibility supports were used, and test administrators reported that 92% of students had access to all supports necessary to participate in the assessment. The 2023 test administrator survey findings also show that educators agreed that 89% of students responded to items to the best of their knowledge, skills, and understandings, and 86% of students were able to respond regardless of disability, behavior, or health concerns. Overall, there is strong evidence supporting the propositions that the Kite Suite is accessible for students and educators.

10.1.1.4 D: Instructionally Relevant Testlets Are Designed to Allow Students to Demonstrate Their Knowledge, Skills, and Understandings Relative to Academic Expectations

Seven propositions describe the design and development of DLM testlets and items: 1) items within testlets are aligned to linkage level; 2) testlets are designed to be accessible to students; 3) testlets are designed to be engaging and instructionally relevant; 4) testlets are written at appropriate cognitive complexity for the linkage level; 5) items are free of extraneous content; 6) items do not contain content that is biased against or insensitive to subgroups of the population; and 7) items are designed to elicit consistent response patterns across different administration formats. The 2021–2022 Technical Manual cites procedural evidence from input statements in the Theory of Action, the item writing handbook, linkage level parameters, item writer qualifications, Accessibility and Test Administration manuals, and helplet videos. The 2021–2022 Technical Manual also describes empirical evidence from alignment data, item analyses and statistics, internal and external reviews, test administrator surveys, focus groups, cognitive process dimension ratings, and text complexity ratings.

In 2022–2023, new items were externally reviewed and field tested prior to promotion to the operational pool. Item analyses and updated data from the test administrator survey contributed to the evaluation of the propositions. Similar to data collected in prior years, on the 2023 test administrator survey, educators indicated that testlets aligned with instruction for approximately 70% of students in English language arts (ELA) and approximately 62% of students in mathematics. As in prior years, item statistics from 2022–2023 demonstrate that items consistently measure the linkage level, and differential item functioning analyses demonstrate that more than 99% of items have no or negligible evidence of differential functioning across student subgroups. During external review of items and testlets, the majority were rated to be accepted in both ELA and mathematics. External review of ELA texts resulted in all texts being accepted as is or after making some revisions. Overall, the evidence supports the propositions with some opportunities for additional data collection.

10.1.1.5 E: Training Strengthens Educator Knowledge and Skills for Assessing

Three propositions pertain to the required test administrator training for DLM assessments: 1) required training is designed to strengthen educator knowledge and skills for assessing; 2) training prepares educators to administer DLM assessments; and 3) required training is completed by all test administrators. The 2021–2022 Technical Manual describes procedural evidence from documentation of scope and development process for training, posttest-passing requirements, Test Administration and Accessibility manuals, description of training options, state guidance on training, and state and local monitoring. The 2021–2022 Technical Manual also describes empirical evidence from test administrator surveys and data extracts. In 2022–2023, test administrator survey data and training data extracts provided additional data to evaluate these propositions. Similar to prior years, 93% of test administrators who responded to the survey agreed or strongly agreed that test administrator training prepared them for test administrator responsibilities, and data extracts show that all test administrators completed required training. In addition, a new optional note-taking tool, a feature to download slides and transcripts, and a “Helpful Reminders” document were added to the DLM training modules in 2023 to support educator engagement with the modules. These additions provided procedural evidence for the proposition that training prepares educators to administer DLM assessments. Overall, the evidence supports the propositions, with some opportunity for continuous improvement.

10.1.1.6 F: Professional Development Strengthens Educator Knowledge and Skills for Instructing Students With Significant Cognitive Disabilities

Three propositions are related to the design and structure of professional development (PD) for educators who instruct students who take DLM assessments: 1) PD modules cover topics relevant to instruction; 2) educators access PD modules; and 3) educators implement the practices on which they have been trained. The 2021–2022 Technical Manual lists procedural evidence from input statements in the Theory of Action, approach to developing PD, process for accessing PD modules, description of PD content, and list of PD modules. The 2021–2022 Technical Manual also describes empirical evidence from PD rating and prevalence data. In 2022–2023, eight states required at least one PD module as part of their required test administrator training. Across all states, 9,122 modules were completed by 2,572 new and 2,409 returning test administrators as part of the required training. In total, 6,056 modules were completed in the self-directed format from August 1, 2022, to July 31, 2023. Since the first PD module was launched in 2012, a total of 83,117 modules have been completed via the DLM PD website. As in prior years, most educators completing PD evaluation surveys agreed that the modules addressed content that is important for professionals working with students with significant cognitive disabilities, the modules presented new ideas to improve their work, and they intended to apply what they learned in the PD modules to their professional practice. Overall, the evidence supports the statement, with opportunity for collection of additional evidence.

10.1.2 Delivery

Table 10.2 shows evidence collected in 2022–2023 to evaluate the delivery statements in the interpretive argument. The sections following the table describe the evidence related to each statement.

| Statement | Evidence collected in 2022–2023 | Chapter(s) |
|---|---|---|
| G. Educators provide instruction aligned with content standards and at an appropriate level of challenge. | Test administrator survey, First Contact survey | 4 |
| H. Educators administer assessments with fidelity. | Test administrator survey, test administration observations, First Contact survey, accessibility support selections | 4, 9 |
| I. Students interact with the system to show their knowledge, skills, and understandings. | Test administrator survey, test administration observations, accessibility support selections, alternate form completion rates | 4 |
| J. The combination of administered assessments measure knowledge and skills at the appropriate depth, breadth, and complexity. | Adaptive routing patterns, linkage level parameters and item statistics, Special Circumstance code file | 3, 4, 7 |

10.1.2.1 G: Educators Provide Instruction Aligned With Content Standards and at an Appropriate Level of Challenge

Two propositions are related to the alignment of instruction with DLM content standards: 1) educators provide students with the opportunity to learn content aligned with the assessed grade-level EE; and 2) educators provide instruction at an appropriate level of challenge based on knowledge of the student. The 2021–2022 Technical Manual describes procedural evidence from input statements in the Theory of Action, the Test Administration Manual, state and local guidance, blueprints, required training, and mini-maps. The 2021–2022 Technical Manual also describes empirical evidence from the test administrator survey, First Contact survey, and focus groups.

The 2022–2023 test administrator survey data show similar results to 2021–2022 in the number of hours of instruction in DLM conceptual areas and the match of testlets to instruction. First Contact survey data on the percentage of students demonstrating fleeting attention or little or no attention to instruction are also similar to those reported in 2021–2022. These results continue to indicate some variability in the amount of instruction and in the level of student engagement in instruction for the full breadth of academic content, with opportunity for continuous improvement.

10.1.2.2 H: Educators Administer Assessments With Fidelity

Four propositions are related to fidelity of assessment administration: 1) educators are trained to administer testlets with fidelity; 2) educators enter accurate information about administration supports; 3) educators allow students to engage with the system as independently as they are able; and 4) educators enter student responses with fidelity (where applicable). The 2021–2022 Technical Manual describes procedural evidence from input statements in the Theory of Action, Test Administration and Accessibility manuals, Testlet Information Pages, required trainings, accessibility support system functionality, and helplet videos. The 2021–2022 manual also includes empirical evidence from test administrator surveys, test administration observations, accessibility support selections, First Contact responses, and writing sample interrater agreement. The results from the 2022–2023 test administrator survey, First Contact survey, accessibility support selections, and test administration observations are very similar to those reported in the 2021–2022 Technical Manual, showing that educators feel prepared and confident to administer DLM testlets, use manuals and/or materials from the DLM Educator Resource Page (https://dynamiclearningmaps.org/instructional-resources-ye), administer assessments as intended, and allow students to engage with the system as independently as they are able. While there is opportunity for additional data collection, the overall evidence supports the propositions for administration fidelity.

10.1.2.3 I: Students Interact With the System to Show Their Knowledge, Skills, and Understandings

Three propositions are related to students interacting with the system to show their knowledge, skills, and understandings: 1) students are able to respond to tasks, regardless of sensory, mobility, health, communication, or behavioral constraints; 2) student responses to items reflect their knowledge, skills, and understandings; and 3) students are able to interact with the system as intended. The 2021–2022 Technical Manual describes procedural evidence from input statements in the Theory of Action, and the Test Administration and Accessibility manuals. The 2021–2022 manual also describes empirical evidence from test administrator surveys, accessibility support data, alternate form completion rates, test administration observations, focus groups, and cognitive labs.

Data collected in 2022–2023 were similar to prior years. Approximately 89% of administrators agreed or strongly agreed that students responded to items to the best of their knowledge, skills, and understanding. Additionally, a high percentage of administrators agreed or strongly agreed that students were able to respond regardless of disability, behavior, or health concerns (86%) and had access to all necessary supports to participate (92%). In 2022–2023, alternate forms for students with blindness or low vision were selected for 2,086 students (2%), and uncontracted braille was selected for 103 students (0.1%). Data from accessibility support selections and test administration observations showed that accessibility supports were widely used (>99% of students had at least one support), and in 97 of 101 observations, administrators were able to deliver assessments without experiencing difficulty using accessibility supports (96%). Overall, the combined evidence supports the statement that students interact with the system to demonstrate their understanding.
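The observation rate reported above can be reproduced with simple arithmetic. The following is a minimal sketch, not the DLM program's analysis code; the helper name `percent` is hypothetical and introduced only for illustration. It uses the two counts stated in the text (97 of 101 observations).

```python
def percent(part: int, whole: int, digits: int = 0) -> float:
    """Return part/whole as a percentage rounded to `digits` decimals.

    Hypothetical helper for illustration; not part of any DLM tooling.
    """
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100 * part / whole, digits)


# Counts taken from the text: observations in which administrators delivered
# assessments without difficulty using accessibility supports.
observations_without_difficulty = 97
total_observations = 101

rate = percent(observations_without_difficulty, total_observations)
print(f"{rate:.0f}% of observations")
```

Rounding to the nearest whole percentage matches the value reported in the text.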

10.1.2.4 J: The Combination of Administered Assessments Measure Knowledge and Skills at the Appropriate Depth, Breadth, and Complexity

Three propositions are related to the depth, breadth, and complexity of administered assessments: 1) the First Contact survey correctly assigns students to the appropriate complexity band; 2) administered testlets are at the appropriate linkage level; and 3) administered testlets cover the full blueprint. The 2021–2022 Technical Manual describes procedural evidence from input statements in the Theory of Action, the description of the First Contact survey design and algorithm development, helplet videos, description of testlet and EE selection procedures, the Instruction and Assessment Planner, and monitoring extracts. The 2021–2022 manual also describes empirical evidence from pilot analyses, adaptive routing patterns, linkage level parameters and item statistics, focus groups, blueprint coverage extracts and analyses, and Special Circumstance codes.

Data from 2022–2023 provide additional evidence that the combination of testlets appropriately measures student knowledge and skills. Linkage level modeling parameters (i.e., probability of masters and nonmasters providing correct responses, base rate of probability) and item statistics (i.e., p-values and standardized difference values) show that students perform as expected on assessed linkage levels, providing some evidence that administered testlets are at the appropriate level. Data on adaptive routing patterns show that testlets did not adapt to a higher linkage level after the first assigned testlet for the majority of students at the Foundational Complexity Band. Similar to prior years’ data, adaptation patterns are more variable for students at higher complexity bands. Overall, the evidence supports the statement that the combination of testlets appropriately measures student knowledge and skills.

10.1.3 Scoring

Table 10.3 shows evidence collected in 2022–2023 to evaluate the scoring statements in the interpretive argument. The sections following the table describe the evidence related to each statement. Given current evidence available and the lack of changes to scoring and reporting, the DLM program did not prioritize collecting additional evidence for use of results for instructional decision-making in 2022–2023.

| Statement | Evidence collected in 2022–2023 | Chapter(s) |
|---|---|---|
| K. Mastery results indicate what students know and can do. | Model fit, model parameters, relationship of mastery results to teacher ratings, reliability results | 5, 7, 8 |
| L. Results indicate summative performance relative to alternate achievement standards. | Performance distributions, reliability results | 7, 8 |
| M. Results can be used for instructional decision-making. | No additional evidence collected | – |

10.1.3.1 K: Mastery Results Indicate What Students Know and Can Do

There are three propositions corresponding to mastery results accurately indicating what students know and can do: 1) mastery status reflects students’ knowledge, skills, and understandings; 2) linkage level mastery classifications are reliable; and 3) mastery results are consistent with other measures of student knowledge, skills, and understandings. The 2021–2022 Technical Manual includes procedural evidence from input statements in the Theory of Action and documentation of mastery results and the scoring method, model fit procedures, the reliability method, and mastery reporting. The 2021–2022 Technical Manual also describes empirical evidence from model fit, model parameters, reliability results, and analyses on the relationship between mastery results and First Contact ratings. As in prior years, 2022–2023 model parameters and reliability results show acceptable levels of absolute model fit and classification accuracy. Additional data were collected in 2022–2023 to examine relationships between educators’ ratings of student mastery and mastery calculated from DLM assessments. The findings show a significant association between educator ratings and DLM scoring of the highest linkage level mastered with a medium effect size. Together, the evidence supports the statement that mastery results indicate what students know and can do.

10.1.3.2 L: Results Indicate Summative Performance Relative to Alternate Achievement Standards

Three propositions are related to the accuracy and reliability of summative performance relative to alternate achievement standards: 1) performance levels provide meaningful differentiation of student achievement; 2) performance level determinations are reliable; and 3) performance level results are useful for communicating summative achievement in the subject to a variety of audiences. The 2021–2022 Technical Manual describes procedural evidence from input statements in the Theory of Action, the standard setting procedure, grade- and subject-specific performance level descriptors, description of reliability method, intended uses documentation, Performance Profile development, and General Research Files. The 2021–2022 Technical Manual also cites empirical evidence from standard setting survey data, performance distributions, reliability analyses, interviews, focus groups, and governance board feedback. In 2023, results for each subject demonstrate that student achievement was distributed across the four performance levels. As in prior years, reliability results were high (e.g., polychoric correlations ranging from .860 to .940). These results indicate the DLM scoring procedure of assigning and reporting performance levels based on total linkage levels mastered produced reliable performance level determinations. Together, the evidence supports the propositions for the accuracy and reliability of summative performance relative to alternate achievement standards.

10.1.3.3 M: Results Can Be Used for Instructional Decision-Making

Three propositions are related to the use of results for instructional planning, monitoring, and adjustment: 1) score reports are appropriately fine-grained; 2) score reports are instructionally relevant and provide useful information for educators; and 3) educators can use results to communicate with parents about instructional plans and goal setting. While no additional evidence was summarized in the 2022–2023 Technical Manual, the 2021–2022 Technical Manual describes procedural evidence from input statements in the Theory of Action, the score report development process, optional use of the Instruction and Assessment Planner, scoring and reporting ad hoc committee, and score report interpretive guides. The 2021–2022 Technical Manual also describes empirical evidence from interview data, educator cadres, and the test administrator survey that provide support for the statement and propositions.

10.1.4 Long-Term Outcomes

Table 10.4 shows evidence collected in 2022–2023 to evaluate the DLM long-term outcomes. The sections following the table describe the evidence related to each statement.

| Statement | Evidence collected in 2022–2023 | Chapter(s) |
|---|---|---|
| N. State and district education agencies use results for monitoring and resource allocation. | No additional sources of evidence; continued delivery of state and district reporting | 7 |
| O. Educators make instructional decisions based on data. | No additional sources of evidence; updated manuals and interpretive guides, continued use of helplet videos and optional Instruction and Assessment Planner | 4, 7 |
| P. Educators have high expectations. | Test administrator survey | 3 |
| Q. Students make progress toward higher expectations. | No additional sources of evidence; educators continued making selections in optional Instruction and Assessment Planner | 4, 7 |

10.1.4.1 N: State and District Education Agencies Use Results for Monitoring and Resource Allocation

There is one proposition for state and district education agencies’ use of assessment results: district and state education agency staff use aggregated information to evaluate programs and adjust resources. The 2021–2022 Technical Manual describes procedural evidence from input statements in the Theory of Action, state and district reports, resources, and guidance provided to support use of aggregated results. The DLM program continued to make state- and district-level reporting and associated guidance available in 2022–2023. Due to variation in policy and practice around use of DLM results for program evaluation and resource allocation within and across states, the DLM program presently relies on states to collect their own evidence for this proposition.

10.1.4.2 O: Educators Make Instructional Decisions Based on Data

There are three propositions corresponding to educators making sound instructional decisions based on data from DLM assessments: 1) educators are trained to use assessment results to inform instruction; 2) educators use assessment results to inform subsequent instruction, including in the subsequent academic year; and 3) educators reflect on their instructional decisions. While no additional sources of evidence were identified in 2022–2023, the 2021–2022 Technical Manual describes procedural evidence from input statements in the Theory of Action, the Test Administration Manual, interpretive guides, state and local guidance, score report helplet videos, and the optional Instruction and Assessment Planner. The 2021–2022 Technical Manual also describes empirical evidence from video review feedback and use rates, focus groups, and the test administrator survey. Administration manuals and interpretive guides were updated for 2022–2023, and educators continued to use the helplet videos and the Planner. Combined with prior evidence, there is support for the statement and propositions, with opportunity for additional data collection.

10.1.4.3 P: Educators Have High Expectations

Two propositions correspond to educators’ expectations for students taking DLM assessments: 1) educators believe students can attain high expectations; and 2) educators hold their students to high expectations. The 2021–2022 Technical Manual describes procedural evidence from input statements in the Theory of Action and empirical evidence from test administrator and skill mastery surveys, as well as focus groups. Spring 2023 survey responses describe educators’ perceptions of the academic content in the DLM assessments. In 2023, educators indicated that content reflected high expectations for 86% of students, which reflects a consistent annual increase since 2015, when it was 72%. Educators also indicated that testlet content measured important academic skills for 78% of students, which similarly reflects a consistent annual increase since 2015, when it was 50%. These data suggest that educator responses may reflect the awareness that the DLM assessments contain challenging content but show slightly more division on its importance. Together, the evidence suggests that there has been some progress toward this long-term outcome, with opportunities for additional data collection.
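The size of the changes described above can be expressed in percentage points. The sketch below is illustrative arithmetic only, using the 2015 and 2023 percentages stated in the text; the function name `point_change` is hypothetical. The per-year figure is a simple average over the interval and is an assumption of this example: it does not imply that every year's increase was that size.

```python
def point_change(start_pct: float, end_pct: float,
                 start_year: int, end_year: int) -> tuple[float, float]:
    """Return (total change, average change per year) in percentage points.

    Hypothetical helper for illustration; the average is descriptive only.
    """
    total = end_pct - start_pct
    per_year = total / (end_year - start_year)
    return total, per_year


# Percentages taken from the text (share of students, per educator responses).
high_expectations = point_change(72, 86, 2015, 2023)
important_skills = point_change(50, 78, 2015, 2023)

print(f"High expectations: +{high_expectations[0]} points "
      f"({high_expectations[1]:.2f} per year on average)")
print(f"Important academic skills: +{important_skills[0]} points "
      f"({important_skills[1]:.2f} per year on average)")
```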

10.1.4.4 Q: Students Make Progress Toward Higher Expectations

There are three propositions pertaining to students making progress toward higher expectations: 1) alternate achievement standards are vertically articulated; 2) students who meet alternate achievement standards are on track to pursue postsecondary opportunities; and 3) students demonstrate progress toward higher expectations. While no additional sources of evidence were identified in 2022–2023, the 2021–2022 Technical Manual describes procedural evidence from input statements in the Theory of Action, vertical articulation, grade- and subject-specific performance level descriptors and development process, postsecondary opportunity panels, and the optional use of the Instruction and Assessment Planner. The 2021–2022 Technical Manual also describes empirical evidence from review of performance level descriptors, postsecondary opportunity panels, test administrator surveys, system data, and focus groups that support this long-term outcome. In 2022–2023, educators continued making selections in the optional Planner. While there is some evidence of students making progress over time, additional evidence is needed to evaluate student progress toward higher expectations.

10.2 Evaluation Summary

In the three-tiered validity argument approach, we evaluate the extent to which statements in the Theory of Action are supported by the underlying propositions. Propositions are evaluated using the set of procedural and empirical evidence collected through 2022–2023. Table 10.5 summarizes the overall evaluation of the extent to which each statement in the Theory of Action is supported by the underlying propositions and associated evidence. We describe evidence according to its strength. We consider the evidence for a proposition to be strong if the amount of evidence is sufficient, it includes both procedural and empirical evidence, and it is not likely to be explained by an alternative hypothesis. We consider the evidence for a proposition to be moderate if it includes only procedural evidence and/or if there is some likelihood that the evidence can be explained by an alternative hypothesis. We also note where current evidence is limited or where additional evidence could be prioritized in future years.
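The strength criteria described above can be read as a small decision rule. The sketch below is illustrative only and is not part of the DLM evaluation procedure: the class `PropositionEvidence`, the function `rate_evidence`, and all field names are hypothetical. It also makes two assumptions the text does not state: evidence that is absent or insufficient in amount is labeled "limited," and evidence that is only empirical is treated as moderate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PropositionEvidence:
    """Hypothetical summary of the evidence gathered for one proposition."""
    sufficient_amount: bool               # is the amount of evidence sufficient?
    has_procedural: bool                  # is any procedural evidence available?
    has_empirical: bool                   # is any empirical evidence available?
    alternative_explanation_likely: bool  # could an alternative hypothesis explain it?


def rate_evidence(evidence: PropositionEvidence) -> str:
    """Return 'strong', 'moderate', or 'limited' for one proposition."""
    has_any = evidence.has_procedural or evidence.has_empirical
    # Assumption: no evidence, or an insufficient amount, is labeled "limited".
    if not has_any or not evidence.sufficient_amount:
        return "limited"
    # Strong: sufficient, both evidence types, no likely alternative explanation.
    if (evidence.has_procedural and evidence.has_empirical
            and not evidence.alternative_explanation_likely):
        return "strong"
    # Moderate: only one evidence type and/or a plausible alternative explanation.
    # Assumption: empirical-only evidence is handled like procedural-only evidence.
    return "moderate"


print(rate_evidence(PropositionEvidence(True, True, True, False)))   # strong
print(rate_evidence(PropositionEvidence(True, True, False, False)))  # moderate
```

In practice the evaluation is a holistic judgment rather than a mechanical rule; the sketch only makes the stated criteria explicit.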

| Statement | Overall evaluation |
|---|---|
| A. Map nodes and pathways accurately describe the development of knowledge and skills. | There is collectively strong evidence that nodes are specified at the appropriate granularity, based on descriptions of the node development process and external review ratings. There is moderate evidence that nodes were correctly prioritized for linkage levels, based on description of the map development process, including rounds of internal review. There is strong evidence that nodes are correctly sequenced, based on the description of the procedures for specifying node connections and external review ratings, and indirect evidence from modeling analyses and alignment data for the correct ordering of linkage levels. Collectively, the propositions support the statement that map nodes and pathways accurately describe the development of knowledge and skills. |
| B. Alternate content standards, the Essential Elements (EEs), provide grade-level access to college and career readiness standards. | There is evidence that the granularity and description of EEs are sufficiently well-defined to communicate a clear understanding of the targeted knowledge, skills, and understandings, based on the development process and state and content expert review. There is strong evidence that EEs capture what students should know and be able to do at each grade to be prepared for postsecondary opportunities (including college, career, and citizenship), based on the development process, alignment studies, vertical articulation evidence, and indirect evidence from the postsecondary opportunities study. There is evidence that the EEs in each grade sufficiently sample the domain, based on the development process for EEs and blueprints prior to their adoption. There is strong evidence that EEs are accurately aligned to nodes in the learning maps, based on documentation of the simultaneous development process, external review ratings, and alignment study data. Collectively, the propositions support the statement that the EEs provide grade-level access to college and career readiness standards. |
| C. The Kite system used to deliver DLM assessments is designed to maximize accessibility. | There is evidence that system design is consistent with accessibility guidelines and contemporary code. There is strong evidence that supports needed by the student are available within and outside of the assessment system, based on system documentation, accessibility support prevalence data, test administrator survey responses, and focus groups. There is evidence that item types support the range of students in presentation and response, based on cognitive labs and test administrator survey responses. There is also evidence that the Kite Suite is accessible to educators, based on test administrator survey responses and focus group findings. Collectively, the propositions support the statement that the Kite Suite is designed to maximize accessibility. |
| D. Instructionally relevant testlets are designed to allow students to demonstrate their knowledge, skills, and understandings relative to academic expectations. | There is strong evidence that items within testlets are aligned to linkage levels, based on test development procedures, alignment data, and item analyses. There is generally strong evidence that testlets are designed to be accessible to students, based on test development procedures and evidence from external review, test administrator survey responses, and focus groups. There is evidence that testlets are designed to be engaging and instructionally relevant, with some opportunity for improvement, based on test development procedures and focus group feedback. There is strong evidence that testlets are written at appropriate cognitive complexity for the linkage level and that items are free of extraneous content. There is strong evidence from item analysis and differential item functioning analyses that items do not contain content that is biased against or insensitive to subgroups of the population. There is some evidence that items elicit consistent response patterns across different administration formats, with opportunity for additional data collection. Together, the propositions support the statement that instructionally relevant testlets are designed to allow students to demonstrate their knowledge, skills, and understandings relative to academic expectations. |
| E. Training strengthens educator knowledge and skills for assessing. | There is strong evidence that required training is designed to strengthen educator knowledge and skills for assessing, based on the documentation of the scope of training and passing requirements and new resources, including an optional note-taking tool. There is some evidence that required training prepares educators to administer DLM assessments, based on survey responses, with opportunities for continuous improvement. There is strong evidence that required training is completed by all test administrators, based on Kite training status data files, state and local monitoring, and data extracts. Together, the propositions support the statement that training strengthens educator knowledge and skills for assessing. |
| F. Professional development strengthens educator knowledge and skills for instructing and assessing students with significant cognitive disabilities. | There is strong evidence that professional development covers topics relevant to instruction, based on the list of modules and educators’ rating of the content. There is some evidence that educators access the professional development modules, based on the module completion data. There is some evidence that educators implement the practices on which they have been trained, but overall use of professional development modules is low. While there is opportunity for continuous improvement in the use of professional development modules and their application to instructional practice, when propositions are fulfilled (i.e., professional development is used), professional development strengthens educator knowledge and skills for instructing students with significant cognitive disabilities. |
| G. Educators provide instruction aligned with EEs and at an appropriate level of challenge. | Evidence that educators provide students the opportunity to learn content aligned with the grade-level EEs shows variable results, based on responses to the test administrator survey and First Contact survey. There is some evidence that educators provide instruction at an appropriate level of challenge using their knowledge of the student, based on test administrator survey responses and focus groups. However, there is also some evidence that some students may not be receiving instruction aligned with the full breadth of academic content measured by the DLM assessment as evidenced by the test administrator survey. There is opportunity to collect additional data on instructional practice. Together, the propositions provide some support that educators provide instruction aligned with EEs and that this instruction is provided at an appropriate level of challenge. |
| H. Educators administer assessments with fidelity. | There is strong evidence that educators are trained to administer testlets with fidelity, based on training documentation, test administrator survey responses, and test administration observations. Documentation describes entry of accessibility supports that is supported by prevalence data from the system, but there is no current evidence available to evaluate the accuracy of accessibility supports enabled for students or their consistency with supports used during instruction. There is some evidence that educators allow students to engage with the system as independently as they are able, based on test administration observations, test administrator survey responses, and accessibility support data, with the opportunity to collect additional data. There is evidence that educators enter student responses with fidelity, based on test administration observations and writing interrater agreement studies. Overall, available evidence for the propositions indicates that educators administer assessments with fidelity, with opportunity for additional data collection. |
| I. Students interact with the system to show their knowledge, skills, and understandings. | There is evidence that students can respond to tasks regardless of sensory, mobility, health, communication, or behavioral constraints, based on test administrator survey responses, accessibility support selection data, test administration observations, and focus groups. There is evidence from cognitive labs, test administrator survey responses, and test administration observations that student responses to items reflect their knowledge, skills, and understandings and that students can interact with the system as intended. Evidence for the propositions collectively demonstrates that students interact with the system to show their knowledge, skills, and understandings. |
| J. The combination of administered testlets measure knowledge and skills at the appropriate depth, breadth, and complexity. | There is generally strong evidence that the First Contact survey correctly assigns students to complexity bands, based on pilot analyses, with the opportunity for additional research. There is strong evidence that administered testlets cover the full blueprint based on blueprint coverage data and Special Circumstance codes. There is also generally strong evidence that administered testlets are at the appropriate linkage level, with the opportunity to collect additional data about system adaptation. Overall, the propositions moderately support the statement that the combination of administered testlets is at the appropriate breadth and complexity. |
| K. Mastery results indicate what students know and can do. | There is strong evidence that mastery status reflects students’ knowledge, skills, and understandings, based on modeling evidence, and there is strong evidence that linkage level mastery classifications are reliable based on reliability analyses. There is also evidence that mastery results are consistent with other measures of student knowledge, skills, and understandings from First Contact survey responses and educator ratings of student mastery. Overall, the propositions support the statement that mastery results indicate what students know and can do. |
| L. Results indicate summative performance relative to alternate achievement standards. | There is strong evidence that performance levels meaningfully differentiate student achievement, based on the standard setting procedure, standard setting survey data, and performance distributions. There is strong evidence that performance level determinations are reliable, based on documentation and reliability analyses. There is also evidence from interviews, focus groups, and governance board feedback that performance level results are useful for communicating summative achievement in the subject to a variety of audiences. Collectively, the evidence supports the statement that results indicate summative performance relative to alternate achievement standards. |
| M. Results can be used for instructional decision-making. | There is strong evidence that reports are fine-grained, based on documentation of score report development and on interview and focus group data. There is evidence that score reports are instructionally relevant and useful and that they provide relevant information for educators, based on documentation of the report development process, interview data, and test administrator survey responses. There is evidence of variability in training on how educators can use results to inform instruction, based on training content, educator cadre feedback, and focus groups, with opportunity to collect additional data. There is evidence that educators can use results to inform instructional choices and goal setting, based on documentation of the report development, interview data, focus groups, and test administrator survey responses. There is evidence that educators can use results to communicate with parents, but there is variability in actual practice, with opportunity for additional data collection. Overall, the propositions support results being useful for instructional decision-making. |
| N. State and district education agencies use results for monitoring and resource allocation. | There is some procedural evidence that district and state staff use aggregated information to evaluate programs and adjust resources. Because state and district policies vary regarding how results should be used for monitoring and resource allocation, states are responsible for collecting their own evidence for this proposition. Additional evidence could be collected from across state education agencies. |
| O. Educators make instructional decisions based on data. | There is evidence that some educators are trained to use assessment results to inform instruction, based on available resources, video use rates and feedback, and focus group feedback, with variability in use. There is some evidence that educators use assessment results to inform instruction, based on feedback from focus groups and the test administrator survey, but local program implementation and score report delivery practices may hinder use of summative score reports for informing instructional practice in the subsequent academic year. There is some evidence that educators reflect on their instructional decisions, based on focus group feedback. Evidence for each proposition provides some support for the statement that some educators make instructional decisions based on data, but variability indicates that not all educators receive training or use data to inform instruction. Additional evidence collection and continuous improvement in implementation would strengthen the propositions and provide greater support for this long-term outcome of the system. |
| P. Educators have high expectations. | There is some evidence that educators believe students can attain high expectations and hold their students to high expectations. Survey responses, focus groups, and system data show variability in educator perspectives. There is opportunity for continued data collection of educators’ understanding of high expectations as defined in the DLM system. Presently, the propositions provide some support for the statement that educators have high expectations for their students, with opportunity for continued improvement. |
| Q. Students make progress toward higher expectations. | There is strong evidence that the alternate achievement standards are vertically articulated, based on the performance level descriptor development process and postsecondary opportunities vertical articulation argument. There is strong evidence, based on the postsecondary opportunities alignment evidence, that students who meet alternate achievement standards are on track to pursue postsecondary opportunities. However, there is a large percentage of students who do not yet demonstrate proficiency on DLM assessments. There is some evidence showing students make progress toward higher expectations based on input from prior Theory of Action statements, focus group data, and system data. There are complexities in reporting growth for DLM assessments, and evaluating within-year progress relies on educator use of optional instructionally embedded assessments, which to date is low. Evidence collected for the propositions to date provides some support for the statement that students make progress toward higher expectations. |

10.3 Conclusion

The DLM program is committed to continuous improvement of assessments, educator and student experiences, and technological delivery of the assessment system. As described in Chapter 1, the DLM Theory of Action guides ongoing research, development, and continuous improvement to achieve intended long-term outcomes. While statements in the Theory of Action are largely supported by existing evidence, the annual evaluation of evidence identifies areas for future research, specifically where current evidence is moderate or limited. The DLM Governance Board will continue to collaborate on additional data collection as needed. Future studies will be guided by advice from the DLM Technical Advisory Committee, using processes established over the life of the DLM system.